Integrated A/B Testing

Focusing on the problem leads to efficiency, error reduction, and user delight.

The product team was initially approached by an internal client operations user with a request to add integrated “list splitting” to the platform. At the time, lists of email subscribers were divided through a multi-step process that typically involved exporting a file, splitting it into smaller lists with a custom-built command line application on the user's computer, then re-uploading the new separate lists.

Defining the Problem

When I approached the user to get some more details, I learned that approximately 90% of list splits were being made to manually create A/B email tests. Less common use cases included delayed batch delivery or simple fixed-size truncation.

While direct responses to the request (simply splitting existing subscriber lists, and integrated batching) were designed and considered, the team decided that we could make a larger impact on efficiency and the business by first integrating A/B testing directly into the system.

In addition to saving time and reducing the opportunity for user error that came with manually creating test and winning email versions, this change would potentially allow for an easy roll-up of overall behavioral reporting data, which previously required manual calculation and presentation.

Test Configuration

Based on competitor analysis and our own existing best practice documentation, recommended variables for email A/B testing typically included subject line, from name/address (known within the application as Sender Profile), message content, and deployment time. To integrate most easily with our standard operations workflow and client expectations, we decided to initially focus on just the first three, that is, "what is sent".

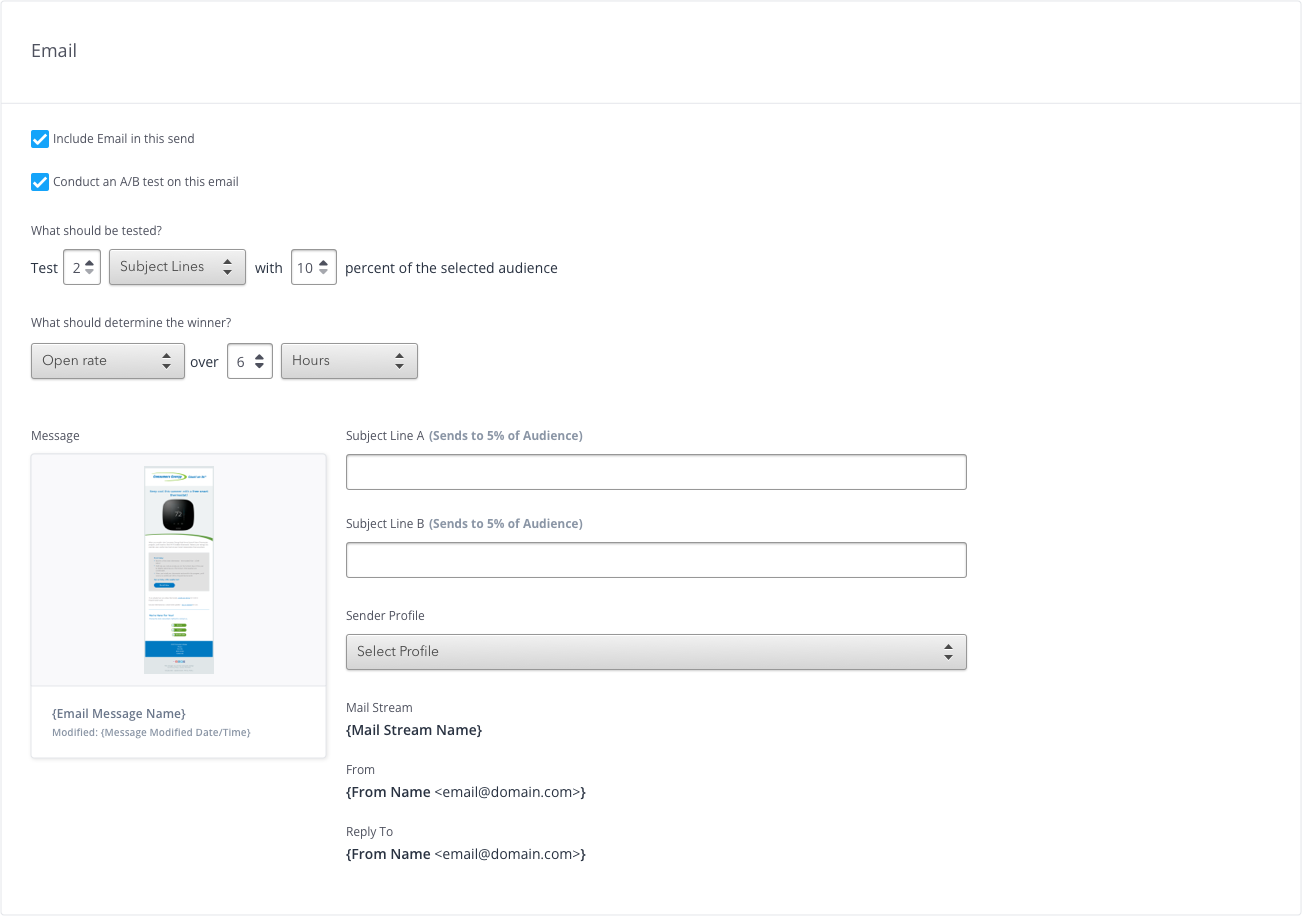

The next step was the creation of a design that allowed the configuration of this new email send type. Rather than duplicating the entire standard email experience to accommodate this single difference, I recommended adding a toggle to the existing configuration. This would preserve users' learned behavior for send configuration while allowing the optional addition of A/B testing variables.

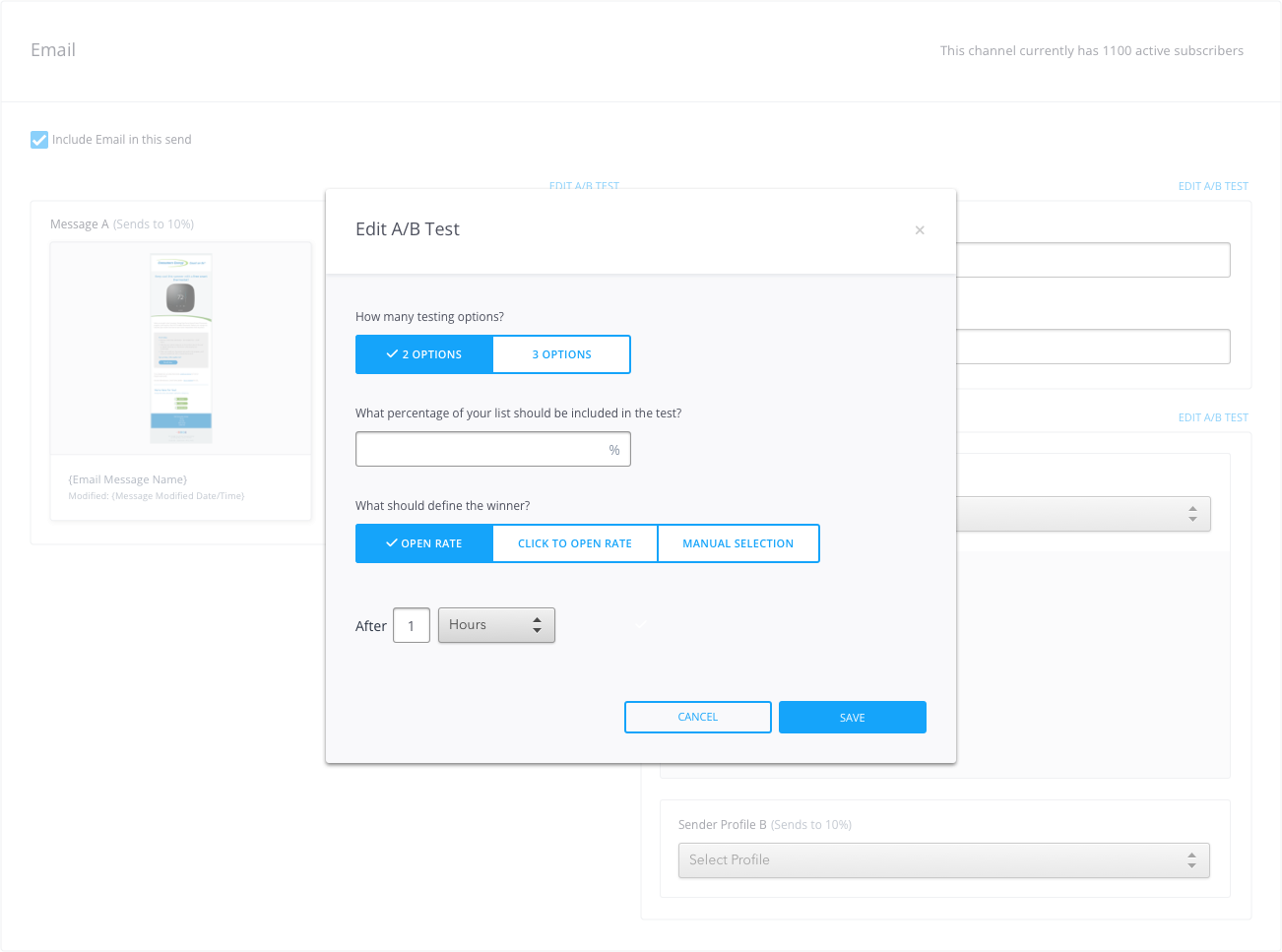

When A/B testing is enabled, controls are displayed, allowing the configuration of testing details, like test size, criteria, and duration along with the actual variables.
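
To illustrate how a test size setting could translate into recipient groups, here is a minimal sketch, not the platform's actual implementation. It assumes the test size is expressed as a fraction of the audience and that the two versions receive equal shares; the function name `split_audience` and its parameters are hypothetical.

```python
import random


def split_audience(subscribers, test_fraction, seed=None):
    """Split subscribers into version A, version B, and the remainder.

    Assumption: test_fraction is the share of the whole audience used
    for testing (e.g. 0.2), divided equally between the two versions.
    """
    if not 0 < test_fraction <= 1:
        raise ValueError("test_fraction must be greater than 0 and at most 1")

    # Shuffle a copy so the caller's list is untouched and the groups are random.
    shuffled = list(subscribers)
    random.Random(seed).shuffle(shuffled)

    per_version = int(len(shuffled) * test_fraction) // 2
    group_a = shuffled[:per_version]
    group_b = shuffled[per_version:2 * per_version]
    remainder = shuffled[2 * per_version:]  # later receives the winning version
    return group_a, group_b, remainder


subscribers = [f"user{i}@example.com" for i in range(100)]
group_a, group_b, remainder = split_audience(subscribers, 0.2, seed=1)
print(len(group_a), len(group_b), len(remainder))
```

This is the same operation users previously performed by exporting, splitting, and re-uploading lists by hand.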

Both modal editing and inline controls were explored, but inline controls proved simpler and more consistent with other patterns.

I simultaneously progressed on the design of the other major steps in the email workflow: approval, scheduling and reporting.

Approval and Scheduling

A recently redesigned approval and scheduling flow provided a framework and starting point, but required some revision to support the display of both version A and B variables for each of the potential criteria. Because configuration, approval and scheduling tasks are typically performed by different users, it was important to highlight if a given send was an A/B test and clearly display the testing variables so the approval user can confirm them.

Multiple variations for a summary message were explored before settling on a style reminiscent of notification toasts used elsewhere in the application. Testing variables are shown both inline with the message to mimic the view of a recipient and in the metadata column where all send settings can be reviewed before scheduling.

Reporting

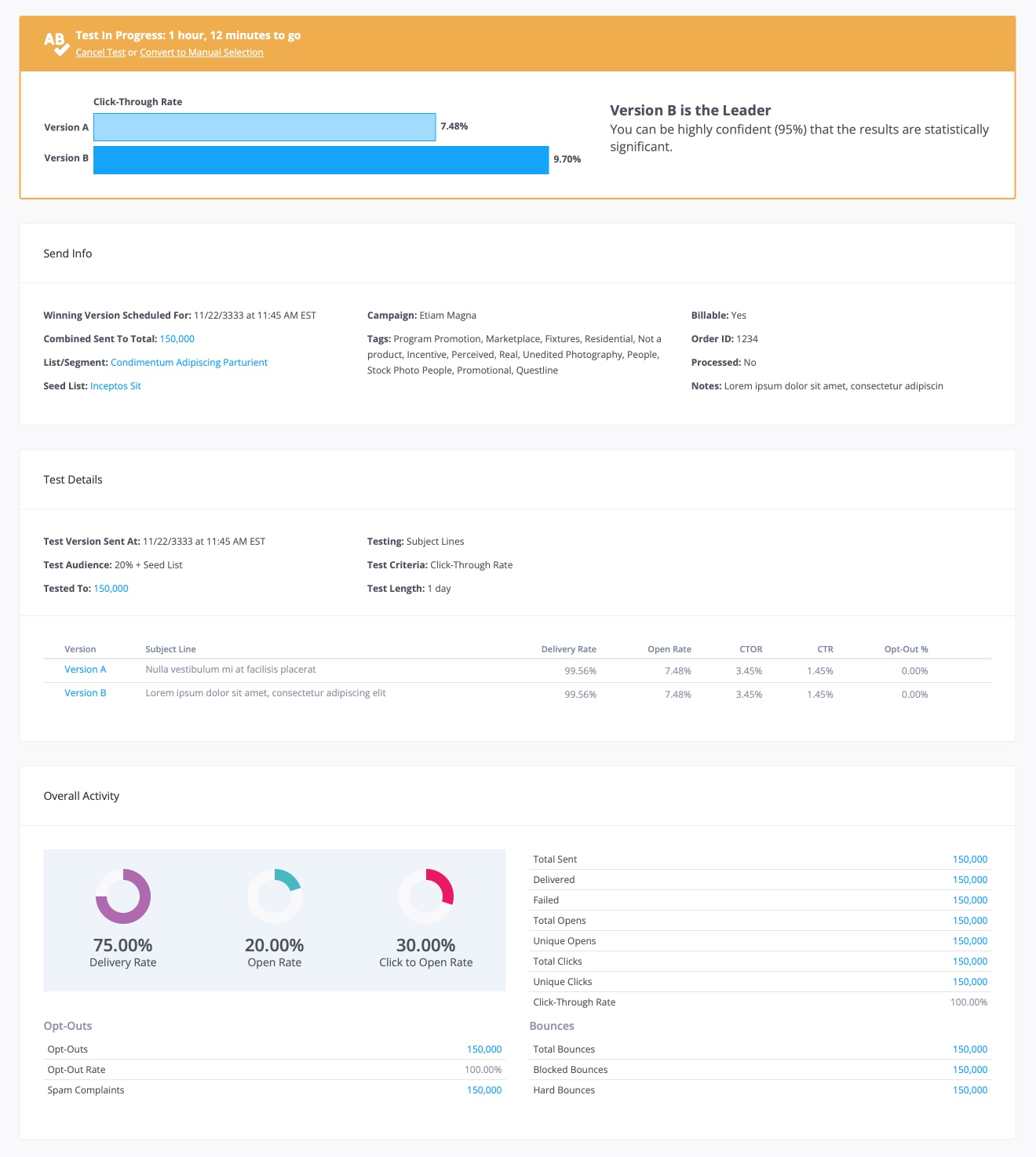

While email configuration and scheduling are often handled by the internal client operations team, email reporting is very often the primary interest of clients and internal account managers.

To keep behavioral reporting in a consistent location, it made sense to show performance during the testing phase within the existing email reporting views. During that phase, a summary of testing progress was given priority at the top of the page. It showed a direct comparison between the versions and the estimated level of confidence that the current winner would reflect the results of the full behavioral data, alongside a testing-specific panel with all comparative behavior for each version.
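
The case study does not describe how the confidence estimate was calculated. As one common approach, offered only as an illustration, a pooled two-proportion z-test can give a rough one-sided confidence that the current leader's rate is truly higher. The function name and inputs below are hypothetical, and both send counts are assumed to be greater than zero.

```python
import math


def leader_confidence(events_a, sent_a, events_b, sent_b):
    """Rough one-sided confidence that the leading version is truly better.

    Uses a pooled two-proportion z-test with a normal approximation.
    events_* could be opens or clicks; sent_* must be greater than zero.
    """
    rate_a = events_a / sent_a
    rate_b = events_b / sent_b
    pooled = (events_a + events_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        return 0.5  # no variation in the data, so no evidence either way

    z = abs(rate_a - rate_b) / std_err
    # Standard normal CDF expressed with the error function.
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


confidence = leader_confidence(120, 1000, 100, 1000)
print(f"{confidence:.0%}")
```

With identical rates the function returns 0.5, and the value approaches 1 as the gap between versions grows relative to the sample size.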

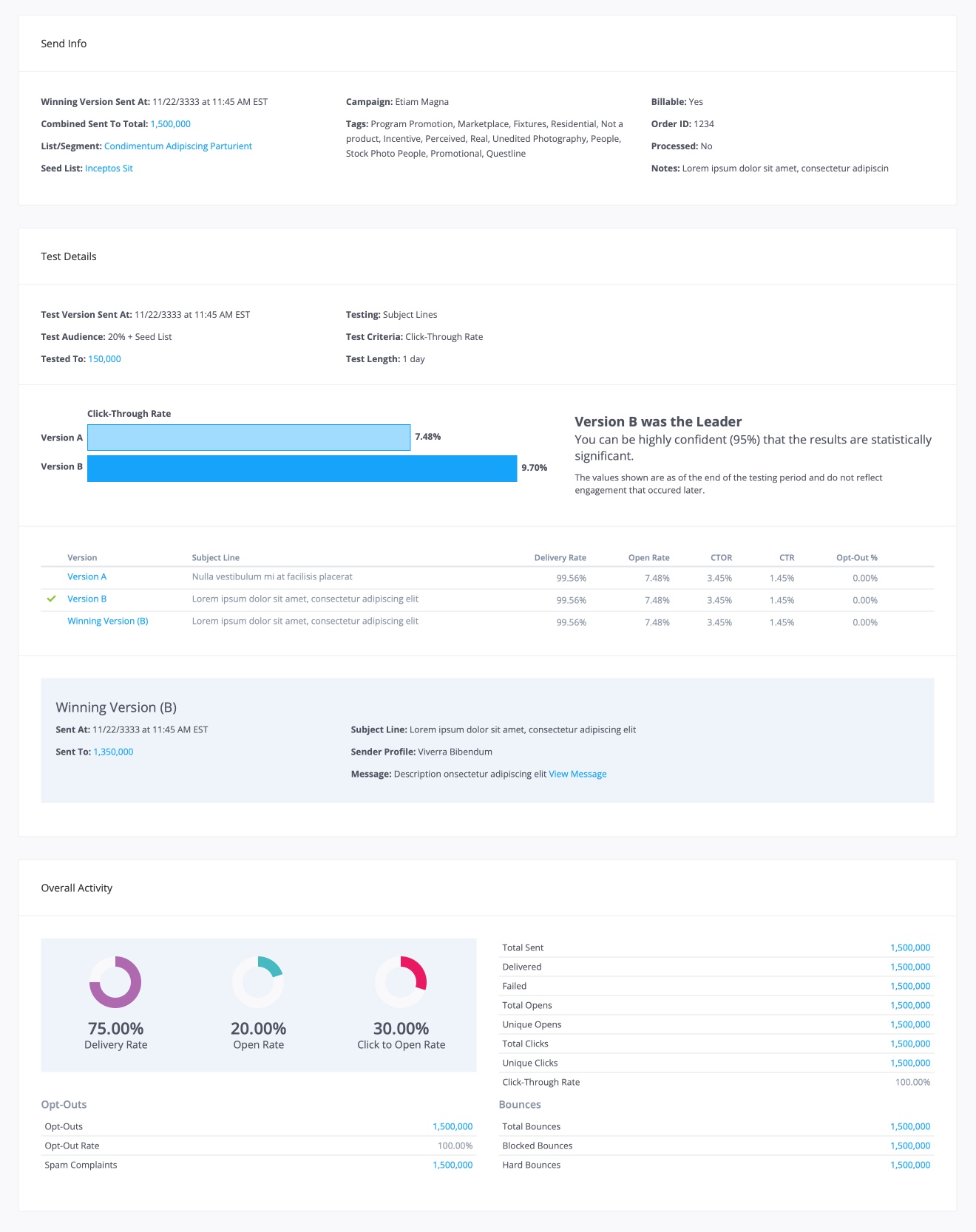

Once a winner is chosen, either automatically at the end of the testing period or manually, the remainder of the audience receives the winning version. At this point, the testing summary is moved into the testing panel to allow for easier comparison of the combined data with other sends. The data for individual versions remains available if needed.
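
The selection rule described above can be sketched in a few lines. This is an assumption-laden illustration rather than the shipped logic: the name `pick_winner` is hypothetical, the rates stand for whichever criterion was configured, and breaking ties in favor of version A is an arbitrary choice.

```python
def pick_winner(rate_a, rate_b, manual_choice=None):
    """Return "A" or "B" as the version sent to the remaining audience.

    A manual choice, if given, overrides the automatic comparison.
    Assumption: ties go to version A.
    """
    if manual_choice is not None:
        if manual_choice not in ("A", "B"):
            raise ValueError('manual_choice must be "A" or "B"')
        return manual_choice
    return "A" if rate_a >= rate_b else "B"


print(pick_winner(0.12, 0.10))                     # automatic selection
print(pick_winner(0.12, 0.10, manual_choice="B"))  # manual override
```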

Conclusion

While data on the full impact of directly integrating A/B testing into the application is not available, we can extrapolate potential gains. Significantly increased efficiency drives a reduction in client cost. If this reduction in cost encourages more clients to take advantage of A/B testing, overall send performance should improve, both in tested emails and in others that benefit from what is learned. Improved performance increases the application's value to clients, which has the opportunity to drive more business in the future.